Create Word Clouds with Word Frequencies

install.packages("tm") # for text mining

install.packages("SnowballC") # for text stemming

install.packages("wordcloud") # word-cloud generator

install.packages("RColorBrewer") # color palettes

Installing package into ‘/usr/local/lib/R/site-library’

(as ‘lib’ is unspecified)

also installing the dependencies ‘NLP’, ‘slam’

Installing package into ‘/usr/local/lib/R/site-library’

(as ‘lib’ is unspecified)

Installing package into ‘/usr/local/lib/R/site-library’

(as ‘lib’ is unspecified)

Installing package into ‘/usr/local/lib/R/site-library’

(as ‘lib’ is unspecified)

library("tm")

library("SnowballC")

library("wordcloud")

library("RColorBrewer")

Loading required package: NLP

Loading required package: RColorBrewer

Let’s find where is the working directory by using the getwd function.

getwd()

‘/content’

# Change the URL

omf <- readLines("OMF.txt", encoding="utf-8")

Warning message in readLines("/content/OMF.txt"):

“incomplete final line found on '/content/OMF.txt'”

# Load the data as a corpus

docs <- Corpus(VectorSource(omf))

inspect(docs)

IOPub data rate exceeded.

The notebook server will temporarily stop sending output

to the client in order to avoid crashing it.

To change this limit, set the config variable

`--NotebookApp.iopub_data_rate_limit`.

Current values:

NotebookApp.iopub_data_rate_limit=1000000.0 (bytes/sec)

NotebookApp.rate_limit_window=3.0 (secs)

toSpace <- content_transformer(function (x , pattern ) gsub(pattern, " ", x))

docs <- tm_map(docs, toSpace, "/")

docs <- tm_map(docs, toSpace, "@")

docs <- tm_map(docs, toSpace, "\\|")

Warning message in tm_map.SimpleCorpus(docs, toSpace, "/"):

“transformation drops documents”

Warning message in tm_map.SimpleCorpus(docs, toSpace, "@"):

“transformation drops documents”

Warning message in tm_map.SimpleCorpus(docs, toSpace, "\\|"):

“transformation drops documents”

We need to curate the data. I didn’t use text stemming, but included code for it.

# Convert the text to lower case

docs <- tm_map(docs, content_transformer(tolower))

# Remove numbers

docs <- tm_map(docs, removeNumbers)

# Remove english common stopwords

docs <- tm_map(docs, removeWords, stopwords("english"))

# Remove your own stop word

# specify your stopwords as a character vector

docs <- tm_map(docs, removeWords, c("blabla1", "blabla2"))

# Remove punctuations

docs <- tm_map(docs, removePunctuation)

# Eliminate extra white spaces

docs <- tm_map(docs, stripWhitespace)

# Text stemming

# docs <- tm_map(docs, stemDocument)

Warning message in tm_map.SimpleCorpus(docs, content_transformer(tolower)):

“transformation drops documents”

Warning message in tm_map.SimpleCorpus(docs, removeNumbers):

“transformation drops documents”

Warning message in tm_map.SimpleCorpus(docs, removeWords, stopwords("english")):

“transformation drops documents”

Warning message in tm_map.SimpleCorpus(docs, removeWords, c("blabla1", "blabla2")):

“transformation drops documents”

Warning message in tm_map.SimpleCorpus(docs, removePunctuation):

“transformation drops documents”

Warning message in tm_map.SimpleCorpus(docs, stripWhitespace):

“transformation drops documents”

Through the sort function, you can sort the data with decreasing or increasing orders. The data need to be organized as a frame to be processed for a word frequency task.

dtm <- TermDocumentMatrix(docs)

m <- as.matrix(dtm)

v <- sort(rowSums(m),decreasing=TRUE)

d <- data.frame(word = names(v),freq=v)

head(d, 10)

| word | freq | |

|---|---|---|

| <chr> | <dbl> | |

| ‘ | ‘ | 5892 |

| ’ | ’ | 2587 |

| said | said | 2169 |

| boffin | boffin | 1035 |

| mrs | mrs | 971 |

| little | little | 872 |

| one | one | 797 |

| upon | upon | 732 |

| know | know | 715 |

| bella | bella | 705 |

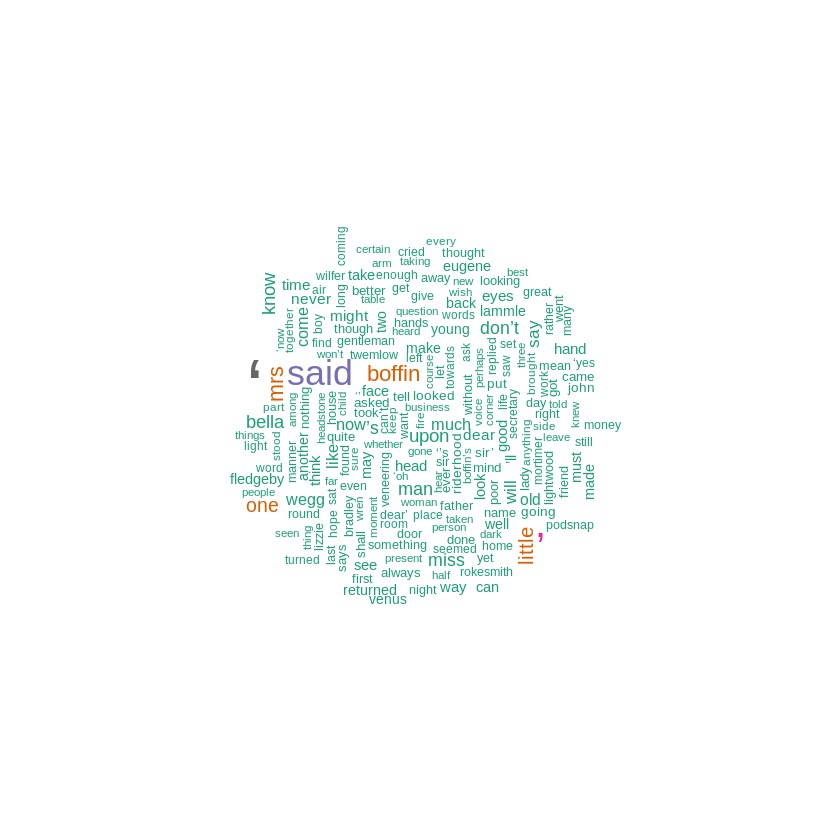

Finally, everything is ready to be visualized. Let’s make a word cloud of word frequencies. You can customize the visualization by changing parameters.

set.seed(1234)

wordcloud(words = d$word, freq = d$freq, min.freq = 5,

max.words=200, random.order=TRUE, rot.per=0.35,

colors=brewer.pal(8, "Dark2"))